Tech

September 12, 2025

Perfect Lip Sync: Why It Matters – and Why Most Tools Fail

From "good enough" to truly synchronized

In the past, Lip Sync meant trying to cut and time a translated audio track to roughly match the speaker’s mouth movements.

It was always a workaround – and rarely convincing.

Today, things have changed.

Thanks to AI, we can now actively adjust the lip movements in the original video to match the translated audio – precisely, naturally, and without visual glitches.

Instead of post-production tricks, we now get results that look and feel like the video was recorded in the new language. But only if it’s done properly.

What is Lip Sync, really?

Lip Sync (short for “lip synchronization”) is the alignment between the spoken audio and the visible mouth movement in a video.

The goal: Viewers should feel like the person on screen is genuinely saying what they’re hearing – regardless of the language.

Achieving that requires more than just timing. It involves:

- Correct articulation (which visible sounds are being formed)

- Sentence rhythm, tone, and pauses

- Facial expressions and movement dynamics

Only when all of this aligns does the result feel real.

Why Lip Sync is so important

Lip Sync is not a nice-to-have – it’s mission-critical for:

- Credibility: Even small mismatches between voice and lips look fake.

- Trust: Especially in leadership videos, learning content, or testimonials.

- Professionalism: Visual dissonance can cheapen even the best message.

- Emotional resonance: Humans instinctively read faces – and if the movement doesn’t match the sound, we disconnect.

If your video shows people talking directly to the camera, strong Lip Sync isn’t optional – it’s essential.

Why 80% Lip Sync isn’t enough

Many tools claim to offer automatic Lip Sync.

But they mostly hit the general rhythm – not the real detail.

- One wrongly timed phoneme? It shows.

- Even a slight lag? Feels robotic.

- Incomplete sentence movement? Looks wrong.

Lip Sync is binary: It’s either perfect, or it doesn’t work.

There’s no room for "close enough" when it comes to faces – we notice every tiny inconsistency.
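The "slight lag" mentioned above can be made measurable. The following is a minimal sketch, not a description of how Dubly or any other tool works. It assumes you already have two per-frame signals of equal length: a mouth-opening value from some face tracker and the loudness envelope of the audio. It then searches for the shift at which the two correlate best. The function name, its parameters, and the demo data are all hypothetical.

```python
import random
from statistics import mean, pstdev


def _normalize(signal):
    """Scale a signal to zero mean and unit variance."""
    mu, sigma = mean(signal), pstdev(signal)
    return [(v - mu) / (sigma or 1.0) for v in signal]


def estimate_av_offset_ms(mouth_open, audio_env, fps, max_lag_frames=10):
    """Estimate a constant lip/audio offset in milliseconds.

    Positive result: the lips move later than the sound.
    Both inputs hold one value per video frame and must be
    longer than max_lag_frames.
    """
    if len(mouth_open) != len(audio_env):
        raise ValueError("signals must have one value per video frame")
    m, a = _normalize(mouth_open), _normalize(audio_env)
    n = len(m)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag_frames, max_lag_frames + 1):
        if lag >= 0:
            pairs = zip(m[lag:], a[:n - lag])   # mouth delayed by `lag` frames
        else:
            pairs = zip(m[:n + lag], a[-lag:])  # audio delayed by `-lag` frames
        score = mean(x * y for x, y in pairs)   # correlation at this shift
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag * 1000.0 / fps


# Synthetic demo data (not real measurements): the "mouth" signal is the
# audio envelope delayed by exactly 3 frames.
rng = random.Random(0)
audio = [rng.random() for _ in range(200)]
mouth = audio[-3:] + audio[:-3]
print(estimate_av_offset_ms(mouth, audio, fps=25))  # 120.0
```

A check like this only captures a constant offset. A single wrongly timed phoneme in the middle of a sentence would need a finer, per-segment comparison.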

How Dubly.AI delivers real Lip Sync

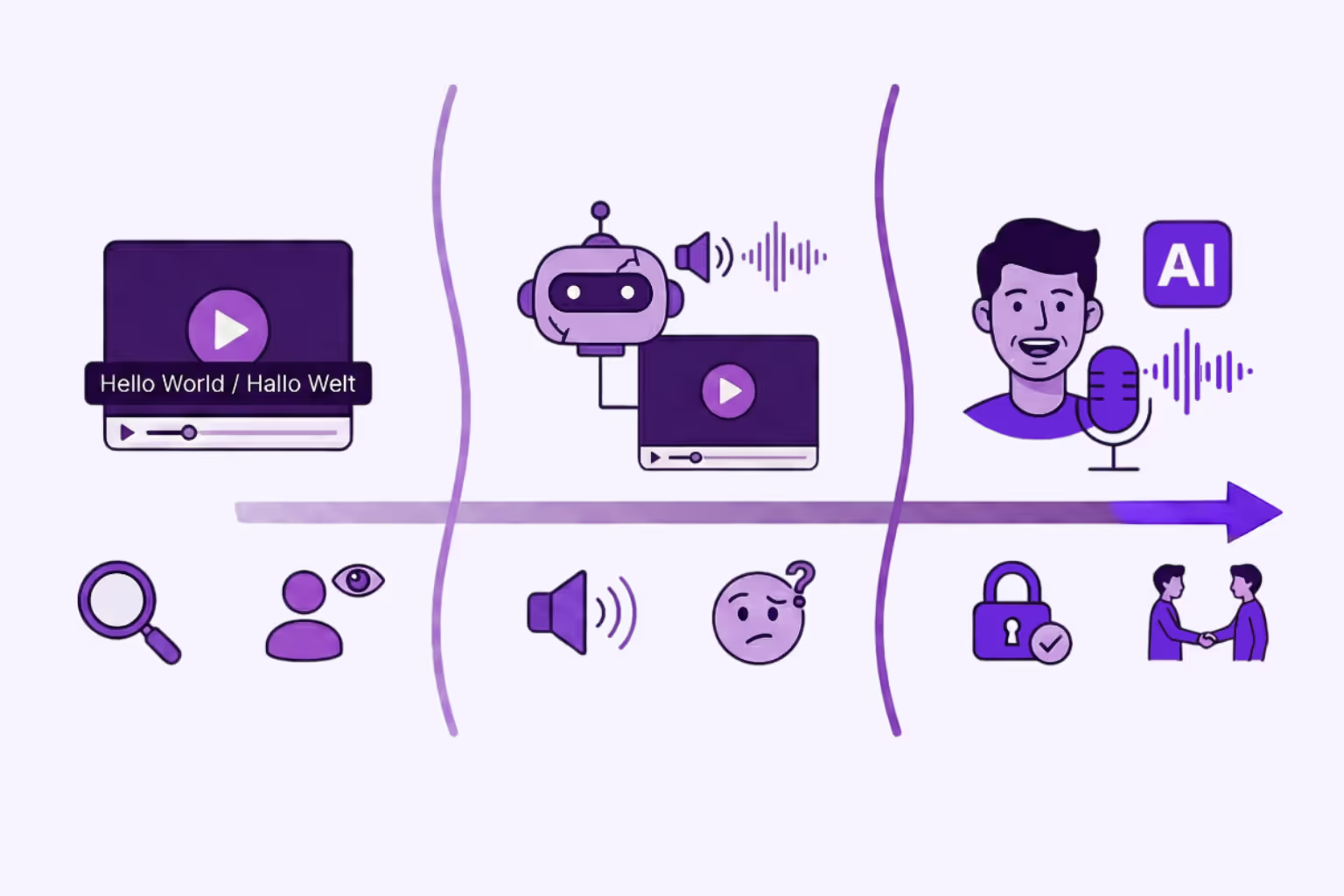

At Dubly, Lip Sync is applied only at the very end of the translation process – after:

- the video has been translated,

- the voice track optimized,

- and (optionally) Voice Cloning applied.

Then, our system analyzes:

- The original lip movements

- The translated speech (timing, intonation, phonetics)

- Context such as sentence structure and camera angle

Based on this, the lip movements in the video are dynamically adjusted – frame by frame, voice by voice – without altering the rest of the face or visuals.

The result: A natural, visually convincing translation that feels like it was shot in the target language.
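Adjusting lips frame by frame presupposes that each video frame can be paired with the slice of translated audio that plays while that frame is on screen. The sketch below shows only this generic pairing step. It is an illustration under stated assumptions, not Dubly's implementation, and the function name and parameters are hypothetical.

```python
def audio_window_for_frame(frame_index, fps, sample_rate):
    """Return the (start, end) audio sample range shown during one video frame.

    Boundaries are computed from absolute frame positions, so rounding
    errors do not accumulate over a long video and consecutive windows
    never overlap or leave gaps.
    """
    start = round(frame_index * sample_rate / fps)
    end = round((frame_index + 1) * sample_rate / fps)
    return start, end


# At 25 frames per second and 16 kHz audio, each frame spans 640 samples.
print(audio_window_for_frame(0, fps=25, sample_rate=16000))   # (0, 640)
print(audio_window_for_frame(10, fps=25, sample_rate=16000))  # (6400, 7040)
```

With frame rates that do not divide the sample rate evenly, the windows simply differ in length by one sample from frame to frame.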

Why most tools fail at Lip Sync

A lot of platforms advertise Lip Sync – but:

- Some use avatar overlays or generic animations

- Others rely on fixed rules like "one sound = one mouth shape"

- Many just stretch the audio without touching the visuals

This often leads to an uncanny valley effect. At best, it’s distracting. At worst, it ruins the message.
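Two of the shortcuts listed above fit in a few lines, which is part of why they fall short. The sketch below is a deliberately naive illustration: the phoneme-to-mouth-shape table is hypothetical and heavily simplified (ARPAbet-style labels, made-up shape names), and neither function reflects any specific product.

```python
# Hypothetical, simplified lookup table: one sound = one mouth shape.
PHONEME_TO_VISEME = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip-teeth", "v": "lip-teeth",
    "uw": "rounded", "ow": "rounded",
    "aa": "open", "ae": "open",
    "s": "narrow", "t": "narrow",
    "iy": "spread",
}


def naive_visemes(phonemes):
    """Map each phoneme to a fixed mouth shape, ignoring its neighbours."""
    return [PHONEME_TO_VISEME.get(p, "neutral") for p in phonemes]


def stretch_factor(original_seconds, translated_seconds):
    """Factor by which translated audio is scaled to fit the original clip."""
    return original_seconds / translated_seconds


# "sue" and "see" both start with /s/, and the table gives both the same shape.
print(naive_visemes(["s", "uw"]))  # ['narrow', 'rounded']
print(naive_visemes(["s", "iy"]))  # ['narrow', 'spread']

# A hypothetical 5-second translation squeezed into a 4-second clip.
print(stretch_factor(4.0, 5.0))  # 0.8
```

In real speech, the lips are already rounding during the /s/ of "sue" and spreading during the /s/ of "see", so a context-free table gets both wrong. The stretch factor, in turn, only changes how fast the audio plays. The lips on screen still form the original words.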

Dubly does it differently.

We use visual AI built for real human faces – no masks, no avatars, no gimmicks. Just precise, high-quality Lip Sync tailored to your actual footage.

Even a slight mismatch undermines credibility. When the speaker looks into the camera, or when the message depends on emotion or trust, imperfect lip sync creates visual dissonance that reduces engagement, trust, and perceived professionalism.

What do most tools do wrong with lip sync?

Many only approximate the timing, stretch the audio, or apply generic rules such as fixed mouth shapes. Others skip adjusting the visible mouth movement entirely. The result is lip synchronization that feels robotic, detached, or "off".

How does Dubly.AI achieve real lip sync?

Dubly runs lip sync as the final step after translation, voice-track optimization (and optionally voice cloning). The system analyzes original lip movements, translated audio (pronunciation, rhythm, phonetics), and context (sentence structure, camera angle), then dynamically adjusts lips frame by frame without altering other facial features.

In what situations is lip sync especially critical?

It matters most when people speak directly to the camera, in leadership messages, emotional storytelling, testimonials, and ads, in international content for platforms like YouTube or social media, and wherever visual authenticity contributes to brand trust.

Conclusion: Lip Sync is not a feature – it’s the foundation

You can get the translation right. You can have a great voiceover. But if the lips don’t match the message, the illusion breaks.

Dubly.AI delivers true Lip Sync – as the final polish that elevates your translated video to broadcast-ready quality.

It’s the difference between understood and believed.

What is lip sync really?

Lip sync is the precise alignment between spoken audio and mouth movements in video. It means matching not just timing but also phonetics, expression, tone, and pauses, so that the person on screen appears to be truly speaking the translated audio.
